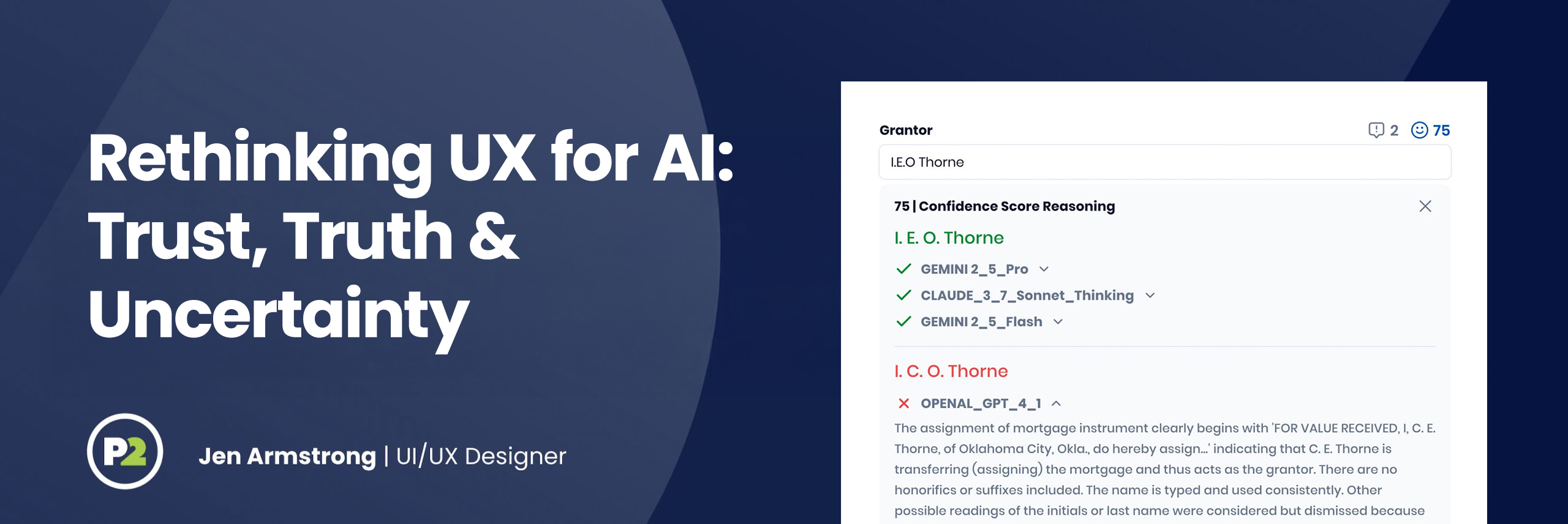

Rethinking UX for AI: Trust, Truth, and Uncertainty

AI introduces new interaction patterns and, with them, new uncertainties. These systems are non-deterministic. That means even when a user asks the same question twice, the answers might not match. So what does it look like to design for that kind of unpredictability? How do we help users spot AI mistakes without tanking their trust? One of the biggest UX challenges right now is figuring out how to acknowledge that AI gets things wrong, sometimes confidently wrong, without undermining the entire experience. Some...
