Flaky Tests on Android with Espresso: Handling Network Calls in Android UI Testing

Espresso is a UI testing framework that lets Android developers simulate user interactions with their application and verify the results of those interactions. It lets you automate basic regression testing of your app without relying on a QA team. However, integration and UI tests are far more likely to be flaky than unit tests.

Flaky tests pass or fail inconsistently, even when the code has not changed. They exist because the test design fails to account for something, such as timing. It isn’t always possible or practical to account for every inconsistency in a test, but flaky tests are costly. If a CI/CD pipeline deploys your application, a single flaky failure blocks the build, and you end up rerunning it until every flaky test happens to pass. This can waste a lot of time and money.

Sample Code

If you would like to see the code used to demonstrate these concepts, clone this repository.

A Flaky Test & Network Calls

Tests can be flaky for several reasons. A major source of flakiness is UI events that depend on network calls, which take varying amounts of time. For example, the test may try to access a button or a list item that isn’t available yet because the app is still awaiting a response from a test server.

    @Test(expected = androidx.test.espresso.NoMatchingViewException::class)
    fun flakeyTest() {
        // Navigate to the second fragment.
        onView(withId(R.id.button_first)).perform(click())
        // button_second is still disabled here, so this click has no effect.
        onView(withId(R.id.button_second)).perform(click())
        // The first fragment never returns, so button_first cannot be found.
        onView(withId(R.id.button_first)).check(matches(isDisplayed()))
    }

The test above switches between two fragments in an activity. Clicking the first button reveals the second button, and clicking the second button reveals the first. The test then verifies that the first button is visible. In the app’s code, however, R.id.button_second isn’t enabled until a small “network call” completes. In the sample project, the button is still disabled when the test clicks it, so the click has no effect and the app never moves back to the other fragment. When the test then checks for the first button, it isn’t there, and Espresso throws the NoMatchingViewException that the test is annotated to expect.

Bad Solutions

The incorrect way to handle this is to guess how long another thread or asynchronous function needs to return its data to the UI thread. Something like Thread.sleep(3000) would then be needed before every UI event that relies on a network call somewhere in the background. Suppose that after years of working on an app for a client, you have accumulated over two hundred UI tests and a thousand unit tests. Fixed sleeps could easily push your builds to two hours, and that isn’t acceptable.
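To make the cost concrete, here is a rough back-of-the-envelope sketch. Only the two hundred tests and the 3-second sleep come from the text above; the function name `sleepOverheadMinutes` and the figure of twelve network-dependent steps per test are assumptions chosen purely for illustration.

```kotlin
// Estimates the minutes a test suite spends inside fixed Thread.sleep calls.
// The function and the sleepsPerTest figure are illustrative assumptions.
fun sleepOverheadMinutes(uiTests: Int, sleepsPerTest: Int, sleepMillis: Long): Double =
    uiTests * sleepsPerTest * sleepMillis / 60_000.0

fun main() {
    // 200 UI tests, a hypothetical 12 sleeps per test, 3 seconds per sleep.
    val minutes = sleepOverheadMinutes(uiTests = 200, sleepsPerTest = 12, sleepMillis = 3_000)
    println(minutes) // prints 120.0
}
```

Under those assumptions, the sleeps alone account for two hours, before any test does real work.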

    @Test
    fun badTest() {
        onView(withId(R.id.button_first)).perform(click())
        // Guess how long the simulated network call takes.
        Thread.sleep(3000)
        onView(withId(R.id.button_second)).perform(click())
        onView(withId(R.id.button_first)).check(matches(isDisplayed()))
    }

An even worse solution is to exclude the test from the build pipeline by annotating it as flaky, for example with @FlakyTest, and then configuring the build to skip tests with that annotation. Developers then have to run the test locally a few times just to see whether it passes at all, and real problems in the application can go unnoticed.

Idling Resources

A better way is to take advantage of Idling Resources. If your project uses RxJava, you may need to do some work to integrate them. Square provides the RxIdler library, which integrates RxJava with Idling Resources.

A quick and dirty implementation of the Idling Resources solution uses RxIdler to wrap the RxJava Schedulers in an Idling Resource. There is one major issue with this solution: you need a way to inject the RxIdler schedulers back into your application code. The Android developer Idling Resources article provides a few suggestions for how to do this. I exposed the schedulers through the activity so that the test can access them and replace them with the RxIdler-wrapped schedulers.

    @Test
    fun idleResourcesTest() {
        rule.scenario.onActivity { activity ->
            // Install RxIdler handlers for RxJava's default computation and IO schedulers.
            RxJavaPlugins.setInitComputationSchedulerHandler(
                Rx2Idler.create("RxJava 2.x Computation Scheduler"))
            RxJavaPlugins.setInitIoSchedulerHandler(
                Rx2Idler.create("RxJava 2.x IO Scheduler"))

            // Wrap the schedulers exposed by the activity and register them with Espresso.
            val ioScheduler = Rx2Idler.wrap(activity.schedulers.iOScheduler, "io Scheduler")
            IdlingRegistry.getInstance().register(ioScheduler)
            val mainThreadScheduler = Rx2Idler.wrap(activity.schedulers.mainThreadScheduler, "main Thread Scheduler")
            IdlingRegistry.getInstance().register(mainThreadScheduler)

            // Hand the wrapped schedulers back to the activity so the app code uses them.
            activity.schedulers.iOScheduler = ioScheduler
            activity.schedulers.mainThreadScheduler = mainThreadScheduler
        }

        onView(withId(R.id.button_first)).perform(click())
        onView(withId(R.id.button_second)).perform(click())
        onView(withId(R.id.button_first)).check(matches(isDisplayed()))
    }

The code above sets up the RxIdler wrappers and registers them with the Idling Registry. This lets Espresso time its calls according to whether the schedulers are idle. Once the wrapped schedulers are assigned back to the main activity, the simulated network call runs on them.
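If your project does not use RxJava, a similar effect can be sketched with Espresso's own CountingIdlingResource. The following is a minimal illustration, not code from the sample project: `NetworkIdlingResource`, `fetchWithIdling`, and `IdlingResourceTestBase` are hypothetical names, and the sketch assumes the espresso-idling-resource artifact is available to both the app and test code. It also unregisters the resource after each test so it does not leak into other tests.

```kotlin
import androidx.test.espresso.IdlingRegistry
import androidx.test.espresso.idling.CountingIdlingResource
import org.junit.After
import org.junit.Before

// Hypothetical holder shared by app and test code; not part of the sample project.
object NetworkIdlingResource {
    // Espresso treats the app as busy while this counter is above zero.
    val counter = CountingIdlingResource("network-calls")
}

// Hypothetical app-side wrapper around an asynchronous request.
// startRequest must invoke onDone exactly once when the response arrives.
fun fetchWithIdling(startRequest: (onDone: () -> Unit) -> Unit) {
    NetworkIdlingResource.counter.increment()      // request started
    startRequest {
        NetworkIdlingResource.counter.decrement()  // response delivered
    }
}

// Test classes can extend this base to register and clean up the resource.
abstract class IdlingResourceTestBase {
    @Before
    fun registerIdlingResource() {
        IdlingRegistry.getInstance().register(NetworkIdlingResource.counter)
    }

    @After
    fun unregisterIdlingResource() {
        IdlingRegistry.getInstance().unregister(NetworkIdlingResource.counter)
    }
}
```

With the resource registered, Espresso waits for the counter to return to zero before running the next perform or check call, so the test itself needs no sleeps.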

A Simple Solution

If you aren’t ready to commit to Idling Resources, try the following pattern.

    import android.view.View
    import androidx.test.espresso.Espresso.onView
    import androidx.test.espresso.ViewInteraction
    import androidx.test.espresso.assertion.ViewAssertions.matches
    import androidx.test.espresso.matcher.ViewMatchers
    import junit.framework.AssertionFailedError
    import org.hamcrest.Matcher

    fun onViewEnabled(viewMatcher: Matcher<View>): ViewInteraction {
        // Returns true once the matched view passes the isEnabled() assertion.
        val isEnabled: () -> Boolean = {
            try {
                onView(viewMatcher).check(matches(ViewMatchers.isEnabled()))
                true
            } catch (e: AssertionFailedError) {
                false
            }
        }

        // Poll up to 10 times, 400 ms apart (4 seconds at most).
        for (attempt in 0..9) {
            Thread.sleep(400)
            if (isEnabled()) {
                break
            }
        }
        return onView(viewMatcher)
    }

One simple solution is a helper that repeatedly checks for the desired condition before moving forward in the test. The onViewEnabled function sleeps briefly, checks whether the UI element being queried is enabled, and repeats up to ten times until it is. This still uses Thread.sleep(), but in smaller increments, so the difference between the time needed and the time spent is minimal. The helper is used in the test below.

    @Test
    fun idleViewMatcherTest() {
        onView(withId(R.id.button_first)).perform(click())
        // Wait until button_second is enabled before clicking it.
        onViewEnabled(withId(R.id.button_second)).perform(click())
        onView(withId(R.id.button_first)).check(matches(isDisplayed()))
    }

We have taken a bad solution, Thread.sleep calls in the tests, and made it less arbitrary. Because the sleeps come in smaller increments, the total wait stays close to the time actually required, producing much less waste.
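The same polling idea can be written as a generic helper that is not tied to one condition. The sketch below is plain Kotlin with no Espresso dependency; `waitUntil` and its default values are hypothetical and simply mirror the 400 ms interval and roughly 4-second limit used above. Unlike onViewEnabled, it checks before sleeping, so a condition that is already true costs no wait.

```kotlin
// Polls `condition` until it returns true or `timeoutMillis` elapses.
// Returns true if the condition was met, false on timeout.
fun waitUntil(
    timeoutMillis: Long = 4_000,
    intervalMillis: Long = 400,
    condition: () -> Boolean
): Boolean {
    val deadline = System.nanoTime() + timeoutMillis * 1_000_000
    while (true) {
        // Check first, so an already-true condition returns immediately.
        if (condition()) return true
        if (System.nanoTime() - deadline >= 0) return false
        Thread.sleep(intervalMillis)
    }
}

fun main() {
    val start = System.currentTimeMillis()
    // Simulated "network call" that finishes after roughly one second.
    val ready = waitUntil { System.currentTimeMillis() - start >= 1_000 }
    println(ready) // prints true
}
```

In an Espresso test, the condition would wrap the same try/catch around check(matches(isEnabled())) shown earlier. Returning a Boolean lets the test fail with a clear message on timeout instead of continuing silently.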

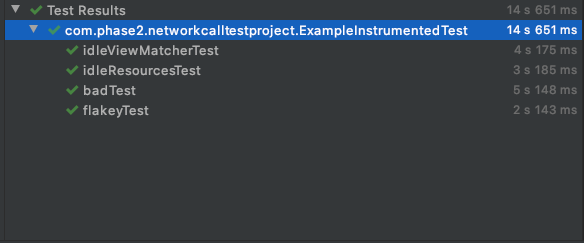

As you can see above, the fastest passing test (excluding flakeyTest, which is expected to fail) is idleResourcesTest. The awaiting view matcher pattern saves about a second at this scale, and the arbitrary sleep placed in the test performed the worst. You should use Idling Resources, but if you can’t, awaiting a view matcher may be easy enough to implement and gives more consistent results.

Phase 2 relies on automated testing to produce high-quality software for its clients. Many of our applications use automated UI testing so that we can save our clients time and money testing standard use cases.