Rethinking UX for AI: Trust, Truth, and Uncertainty

AI introduces new interaction patterns, and with them, new uncertainties. Generative AI systems are often non-deterministic: ask the same question twice and you may get two different answers. So what does it look like to design for that kind of unpredictability?

How do we help users spot AI mistakes—without tanking their trust?

One of the biggest UX challenges right now is figuring out how to acknowledge that AI gets things wrong—sometimes confidently wrong—without undermining the entire experience.

Some evolving approaches:

- Confidence indicators: Simple cues like “likely accurate” or “low confidence” help set expectations. They don’t need to be obtrusive—they just give people a feel for how much they should trust what they’re seeing.

- Inline disclaimers: Lightweight reminders like “AI-generated summary. May contain errors.” They can be subtle and still go a long way toward managing expectations.

- Error recovery options: Think “flag this,” “regenerate,” or “edit manually.” These aren’t just nice-to-haves—they’re safety nets that let users recover from a weird result instead of feeling stuck.

- Human-sounding hedges: Prompt or fine-tune the model to use language like “based on available information, here’s my best take…” This signals openness without triggering alarm bells.

- Expose provenance: Let users trace AI outputs back to their sources. Showing where a piece of information came from helps establish credibility—and gives users something to fall back on if they need to verify it.

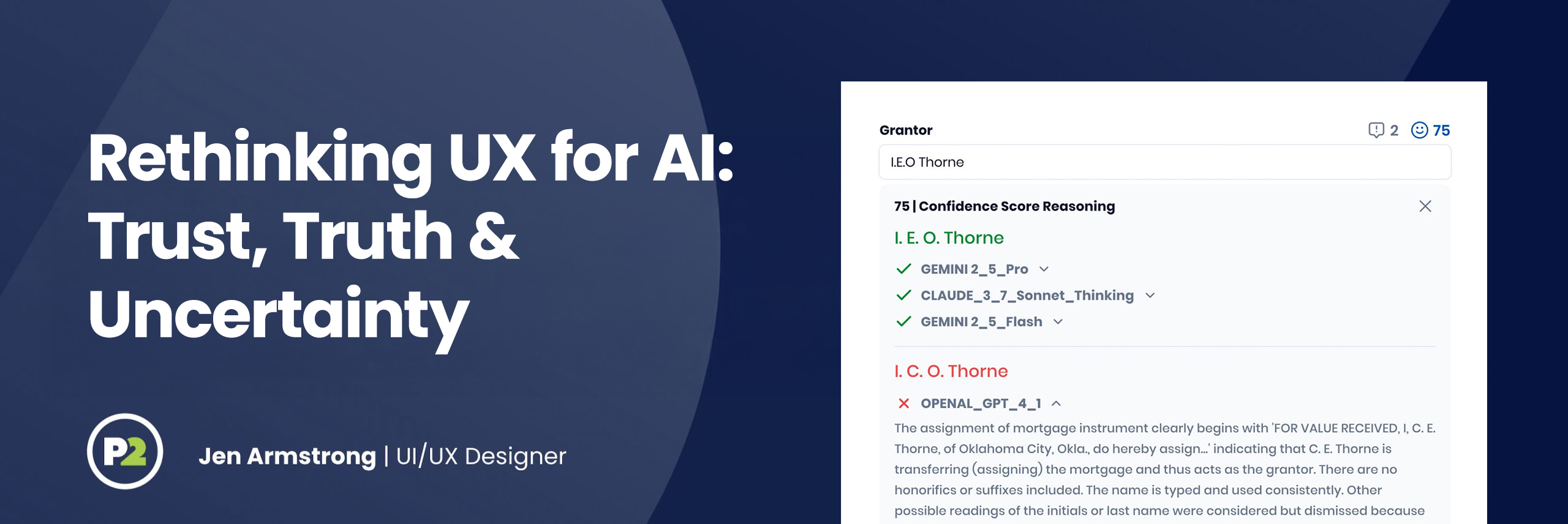

- Case study example: In a legal tool we designed to summarize 40-page deeds, we anchored every AI output to its original clause, offered source previews on click, and color-coded confidence levels. The UX didn’t just present a summary—it made trust visible.

This balancing act—being honest about limitations without spooking the user—is going to be a defining challenge of AI UX.
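
To make the confidence-indicator and provenance ideas above more concrete, here is a minimal Python sketch. It assumes each summary statement arrives with a confidence score between 0 and 1 and the ID of the source clause it came from. The names (`SummaryPoint`, `confidence_label`, `render`), the thresholds, and the middle “verify if important” tier are all hypothetical; real cut-offs would need to be calibrated against how often outputs at each score were actually correct.

```python
from dataclasses import dataclass
from typing import Tuple

# Hypothetical thresholds; real values should be calibrated against
# how often outputs at each score turned out to be correct.
HIGH_THRESHOLD = 0.8
LOW_THRESHOLD = 0.5

DISCLAIMER = "AI-generated summary. May contain errors."


@dataclass
class SummaryPoint:
    text: str          # one AI-generated summary statement
    clause_id: str     # ID of the source clause it is anchored to (provenance)
    confidence: float  # score in [0, 1] supplied by the model or pipeline


def confidence_label(score: float) -> Tuple[str, str]:
    """Map a confidence score to a (label, color) pair for display."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if score >= HIGH_THRESHOLD:
        return "likely accurate", "green"
    if score >= LOW_THRESHOLD:
        return "verify if important", "amber"
    return "low confidence", "red"


def render(point: SummaryPoint) -> str:
    """Format one statement with its color, label, and source clause."""
    label, color = confidence_label(point.confidence)
    return f"[{color}] {point.text} ({label}; source: clause {point.clause_id})"


if __name__ == "__main__":
    # Placeholder statements, not real output from any tool.
    points = [
        SummaryPoint("Statement drawn from an access clause.", "4.2", 0.91),
        SummaryPoint("Statement drawn from a reservation clause.", "7.1", 0.42),
    ]
    print(DISCLAIMER)
    for p in points:
        print(render(p))
```

Keeping the clause ID on every statement is what makes source previews cheap to build: a click handler only has to look up the clause text by that ID.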

What does ‘truth’ even mean in an AI interface?

AI doesn’t always return the answer. It often returns an answer.

So how do we design for that shift?

- Show multiple responses: Offering a few interpretations or answers encourages curiosity and communicates that there isn’t always one “right” path.

- Pull back the curtain: Reveal citations, data sources, or even step-by-step reasoning when possible. Giving users a peek into how the answer was formed builds credibility.

- Let users guide the shape of the answer: Small inputs like “make it shorter,” “be more optimistic,” or “use casual tone” allow people to tailor the experience—and feel more in control.

In a world where truth is often fuzzy or context-dependent, UX needs to focus more on transparency and user agency than absolutes.
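
One way to wire up “show multiple responses” and “let users guide the shape of the answer” is to turn each refinement chip into a plain-language instruction and ask the backend for several candidates at once. This is a sketch only: `REFINEMENTS`, `GenerationRequest`, and `build_request` are hypothetical names, and it assumes a model backend that accepts a prompt plus a candidate count, which some APIs offer under their own parameter names.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical mapping from one-tap refinement chips to plain-language instructions.
REFINEMENTS = {
    "shorter": "Make the answer shorter.",
    "optimistic": "Be more optimistic.",
    "casual": "Use a casual tone.",
}


@dataclass
class GenerationRequest:
    prompt: str          # the user's question plus any refinement instructions
    num_candidates: int  # how many alternative answers to show side by side


def build_request(question: str, chips: List[str], num_candidates: int = 3) -> GenerationRequest:
    """Combine a question with the user's selected chips into one request."""
    unknown = [c for c in chips if c not in REFINEMENTS]
    if unknown:
        raise ValueError(f"unknown refinement(s): {unknown}")
    if num_candidates < 1:
        raise ValueError("num_candidates must be at least 1")
    instructions = " ".join(REFINEMENTS[c] for c in chips)
    prompt = f"{question}\n\n{instructions}" if instructions else question
    return GenerationRequest(prompt=prompt, num_candidates=num_candidates)


if __name__ == "__main__":
    request = build_request("Summarize this memo.", ["shorter", "casual"])
    print(request.prompt)
    print(request.num_candidates)
```

Keeping refinements as explicit chips, rather than asking users to rewrite their whole prompt, makes each adjustment a single tap that is easy to undo.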

How do we rethink filters when the content doesn’t already exist?

Traditional filters help users narrow down search results. But with AI, we’re often generating something entirely new, so there is no fixed set of results to sort through.

So what replaces filters?

- Creativity sliders (aka temperature settings): These let users choose how bold or safe they want the output to be. Lower settings produce more predictable responses; higher ones get more varied and surprising, sometimes weird in a good way.

- Sentiment filters: These let users influence the emotional tone of the content. Want something more uplifting? More neutral? These nudges let people tune the vibe.

- Intent toggles: Handy buttons like “summarize,” “expand,” or “rewrite” give structure to a conversation that could otherwise go in a million directions.

These aren’t just controls—they’re anchors that help users shape what the system gives them.
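
Under the hood, temperature rescales the model’s scores for each candidate next token before they become probabilities: dividing by a small temperature sharpens the distribution toward the top choice, while a large one flattens it. The sketch below shows that standard temperature-scaled softmax, plus a hypothetical mapping from a 0 to 100 creativity slider onto a temperature range. The 0.2 to 1.2 range and the function names are assumptions; sensible values depend on the model and provider.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores to probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def slider_to_temperature(position: float, low: float = 0.2, high: float = 1.2) -> float:
    """Linearly map a 0-100 creativity slider onto a (hypothetical) temperature range."""
    if not 0 <= position <= 100:
        raise ValueError("slider position must be between 0 and 100")
    return low + (high - low) * position / 100


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate next tokens
    for position in (0, 50, 100):
        t = slider_to_temperature(position)
        probs = softmax_with_temperature(logits, t)
        print(position, round(t, 2), [round(p, 3) for p in probs])
```

At the low end of the slider nearly all probability lands on the top-scoring token, so responses stay predictable; at the high end the lower-ranked options get a real share, which is where the variety (and the occasional weirdness) comes from.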

UX as Sensemaking

More than ever, designers are playing the role of interpreters. We’re creating not just interfaces, but scaffolding—context, clues, and guardrails that help people make sense of AI-driven experiences.

Our responsibilities now include:

- Setting expectations early (“This AI is experimental” goes a long way)

- Providing clarity behind the curtain (“Here’s why it made that suggestion”)

- Making room for user corrections (“Let me try that again”)

- Designing for human override: AI shouldn’t be the final word. Interfaces should accept pushback and leave room for human intervention, especially in high-stakes situations.

We can’t make AI perfectly accurate or totally predictable—but we can design how it feels to use, question, and recover from it.
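
Human override and error recovery are easier to honor when they are part of the data model rather than an afterthought. Here is a minimal sketch with hypothetical names (`Suggestion`, `Status`, `output_to_use`): every AI suggestion carries a status, low-stakes suggestions can be used as editable drafts, and high-stakes ones are held until a person accepts, edits, or rejects them. The history list doubles as a simple audit trail of human decisions.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Status(Enum):
    PENDING = "pending"    # AI proposed it; no human decision yet
    ACCEPTED = "accepted"  # a person approved it as-is
    EDITED = "edited"      # a person corrected it before using it
    REJECTED = "rejected"  # a person overrode it


@dataclass
class Suggestion:
    text: str
    high_stakes: bool
    status: Status = Status.PENDING
    final_text: Optional[str] = None
    history: List[str] = field(default_factory=list)  # audit trail of human actions

    def accept(self) -> None:
        self.status = Status.ACCEPTED
        self.final_text = self.text
        self.history.append("accepted")

    def edit(self, new_text: str) -> None:
        self.status = Status.EDITED
        self.final_text = new_text
        self.history.append("edited")

    def reject(self, reason: str) -> None:
        self.status = Status.REJECTED
        self.final_text = None
        self.history.append(f"rejected: {reason}")


def output_to_use(s: Suggestion) -> Optional[str]:
    """Return the text the product may act on, or None if it must wait or stop."""
    if s.status in (Status.ACCEPTED, Status.EDITED):
        return s.final_text
    if s.status is Status.PENDING and not s.high_stakes:
        return s.text  # low stakes: the AI draft can be used, but stays editable
    return None  # rejected, or high-stakes and still awaiting a human
```

For example, a high-stakes suggestion returns `None` from `output_to_use` until someone calls `accept()` or `edit(...)`, while a low-stakes one can flow straight through and still be rejected later.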

Final Thoughts

AI is making UX more essential—not less. And not just in the way we craft interfaces, but in how we help users navigate a world where not everything has a clear answer.

The best AI products won’t just be smart—they’ll be human-aware. They’ll give people tools to explore, to question, and to feel safe in the ambiguity. That’s our job now: to design not just what works, but what makes sense when things don’t.
