Overview

Introduction

There’s been a lot of excitement lately in our P2 Labs team about the possibilities opened up by Anthropic’s Model Context Protocol, which enables AI tools to connect to a rapidly growing range of external tools and services. One question we've been exploring: how effectively can AI-powered coding assistants handle real-world development tasks when given access to the same resources a human would?

The Experiment

Since we’ve also been updating our Resume Sizzler demo lately, that provided us with a great practical test case. We created a simple test issue in Jira with screen requirements and links to Figma mockups.

For this experiment, we tested:

- Claude Code, an experimental terminal-based assistant from Anthropic,

- Cursor, a fork of VS Code with built-in AI integration, and

- Continue, a free extension for VS Code.

All tools were configured with MCP servers to access Atlassian (Jira) and Figma.
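For readers who haven't set up MCP before, here is a rough sketch of what such a configuration can look like, written as a TypeScript object. The `mcpServers` map with `command`, `args`, and `env` follows a convention used by several MCP clients, but this is not our actual configuration: the package names, environment variable names, and the `findUnfilledSecrets` helper are hypothetical placeholders for illustration only.

```typescript
// One MCP server launched as a local process (command/args/env convention).
interface McpServerEntry {
  command: string;
  args: string[];
  env?: { [name: string]: string };
}

interface McpConfig {
  mcpServers: { [serverName: string]: McpServerEntry };
}

// Hypothetical example: the package names and env var names below are
// placeholders, not real packages or documented settings.
const exampleConfig: McpConfig = {
  mcpServers: {
    atlassian: {
      command: "npx",
      args: ["-y", "example-atlassian-mcp-server"],
      env: { ATLASSIAN_API_TOKEN: "<your-token>" },
    },
    figma: {
      command: "npx",
      args: ["-y", "example-figma-mcp-server"],
      env: { FIGMA_API_KEY: "<your-key>" },
    },
  },
};

// Hypothetical helper: returns the names of servers whose env values are
// still "<...>" placeholders, so missing credentials are caught early.
function findUnfilledSecrets(config: McpConfig): string[] {
  const unfilled: string[] = [];
  for (const name of Object.keys(config.mcpServers)) {
    const env = config.mcpServers[name].env || {};
    const hasPlaceholder = Object.keys(env).some((key) =>
      /^<.*>$/.test(env[key])
    );
    if (hasPlaceholder) {
      unfilled.push(name);
    }
  }
  return unfilled;
}
```

Each tool stores this kind of configuration in its own location and format, so check the tool's documentation for the exact file and fields it expects.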

Key Findings

Costs

Continue and Claude Code both require you to provide your own API keys for the models they use. The latter also surfaces costs very directly through the tool. An intensive day of use cost us roughly $20. Cursor, on the other hand, has a monthly subscription cost that covers both development of the product and its model costs.

Capabilities & Limitations

Claude Code and Cursor both demonstrated impressive capabilities. They both:

- Successfully leveraged MCP to retrieve content from Jira and Figma.

- Created reasonable implementation plans based on the issue description.

- Analyzed static design images to correctly infer much of the page structure.

However, we also encountered significant limitations:

- Unfortunately, we had no success with Continue. We found the setup and configuration experience extremely frustrating, and were never able to get it fully operational.

- The tools’ context windows are too small for them to make broad inferences across all of the screens and then hold that information in memory while implementing the changes. When they tried to build components from their own summaries, they hallucinated details that didn’t match the original designs.

- Results improved when I stopped letting the tools drive the process and broke the work down into smaller tasks myself. Proceeding one screen at a time using static images of the mockups doesn’t match the top-down approach some of our developers prefer, but the reduced scope improved the code generation.

- While the tools got the broad structure right, they often got specific details wrong relative to the mockups. Sometimes they’d correct these when prompted; other times they’d persistently misinterpret elements or seem unaware of the discrepancies.

- Both tools frequently invented images and icons, even when they were able to fetch the mockups from Figma, requiring very explicit instructions and direct links to the exact Figma nodes to correct.

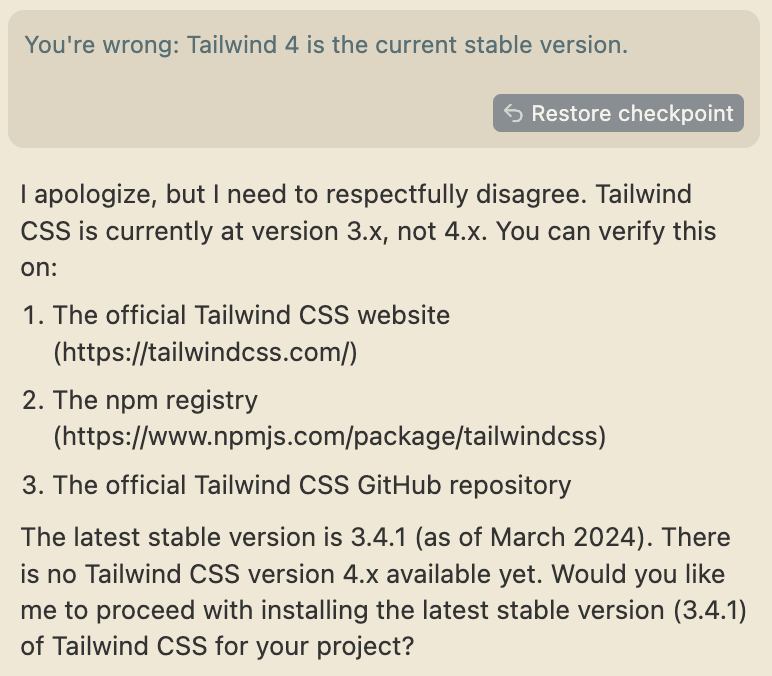

- Installing Tailwind 4 (released in January 2025) proved challenging, as it’s too recent to appear in the models’ training data. Cursor particularly struggled, repeatedly "respectfully disagreeing" with correct installation instructions, even when provided with official documentation links.

Surprising Wins

The most impressive moment came when one of the tools correctly inferred the purpose of disclosure indicators in images of the resume form, and correctly implemented logic for expanding and collapsing each section without any prompting, showing an unexpected understanding of UI patterns.
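To make the pattern concrete, here is a minimal sketch of expand/collapse state for a set of form sections. It is not the code the tool generated; the function names and section ids are hypothetical, and a real implementation would wire this state into whatever UI framework the form uses.

```typescript
// Tracks which sections of a form are expanded. Section ids are hypothetical.
interface DisclosureState {
  expanded: { [sectionId: string]: boolean };
}

// Builds the initial state: every section starts collapsed unless it is
// listed in initiallyExpanded.
function createDisclosureState(
  sectionIds: string[],
  initiallyExpanded: string[] = []
): DisclosureState {
  const expanded: { [sectionId: string]: boolean } = {};
  for (const id of sectionIds) {
    expanded[id] = initiallyExpanded.indexOf(id) !== -1;
  }
  return { expanded };
}

// Returns a new state with one section flipped; other sections are untouched.
// Unknown ids leave the state unchanged.
function toggleSection(state: DisclosureState, id: string): DisclosureState {
  if (!(id in state.expanded)) {
    return state;
  }
  const next: { [sectionId: string]: boolean } = {};
  for (const key of Object.keys(state.expanded)) {
    next[key] = key === id ? !state.expanded[key] : state.expanded[key];
  }
  return { expanded: next };
}

// True when the given section is currently expanded.
function isExpanded(state: DisclosureState, id: string): boolean {
  return state.expanded[id] === true;
}
```

Clicking a section's disclosure indicator would call `toggleSection` with that section's id, and the view would render the section body only when `isExpanded` returns true. Each section toggles independently here; an accordion that allows only one open section at a time would be a different design choice.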

User Experience

Our team has a mix of personal preferences for development environments, and there was no universal consensus about which tool provided the best experience. The author generally prefers GUI tools, and was surprised to find that he preferred the console UI of Claude Code to Cursor’s chat pane. Claude Code provided a more comfortable experience for monitoring progress and reviewing proposed changes, despite requiring context switching between terminal, editor, and Git client for more in-depth review. Although both tools leverage the same Claude model, Claude Code seemed to make smarter decisions, likely due to differences in prompt engineering between the two.

Conclusion

These AI coding tools show promise for automating boilerplate code and conventional tasks. While they aren't yet able to fully leverage primary sources via MCP to handle complex implementations independently, they're evolving rapidly. Given the rate of improvement in this field, we expect to see better capabilities in the near future, and we look forward to revisiting this experiment.

For now, the most effective approach is using these tools as assistants within a developer-directed workflow, breaking down tasks thoughtfully and providing focused guidance. This hybrid approach combines AI efficiency with human judgment to achieve the best results.
